My research work on…

Digital Health

The Wearonomics Lab is a research lab dedicated to exploring the intersection of wearable technology, health data, and economic empowerment. The focus lies in uncovering how wearable devices can generate meaningful insights while enabling financial incentives for individuals through tokenized ecosystems.

By integrating data from wearables with innovative micro-finance models, the lab aims to create pathways for individuals in underserved regions to access new economic opportunities. The research explores how health and activity data can be leveraged for social good, aligning personal benefits with broader public health and financial goals.

My professional work on…

Prediction

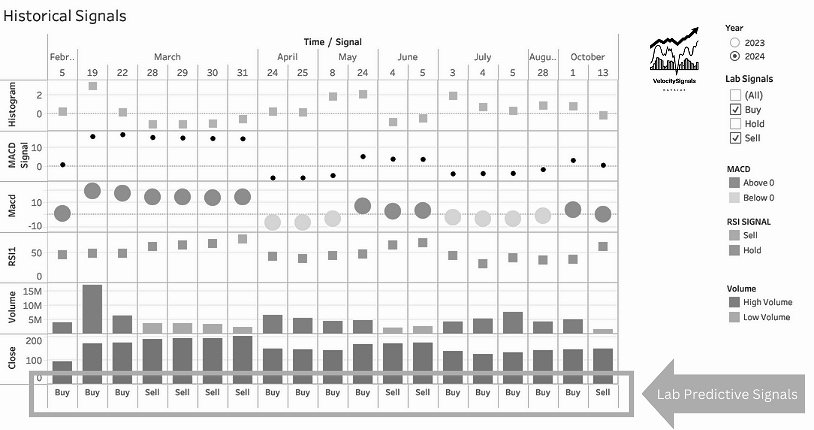

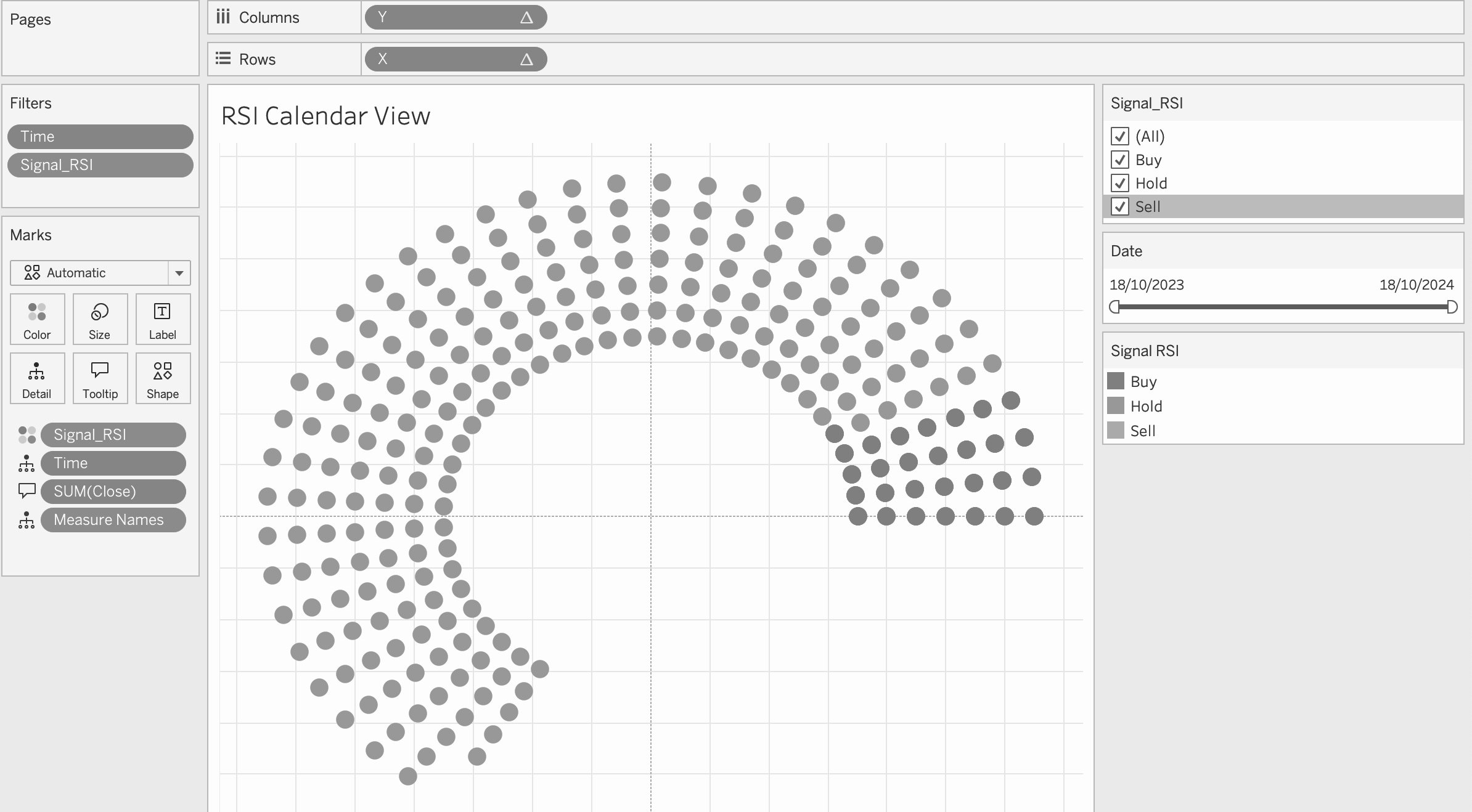

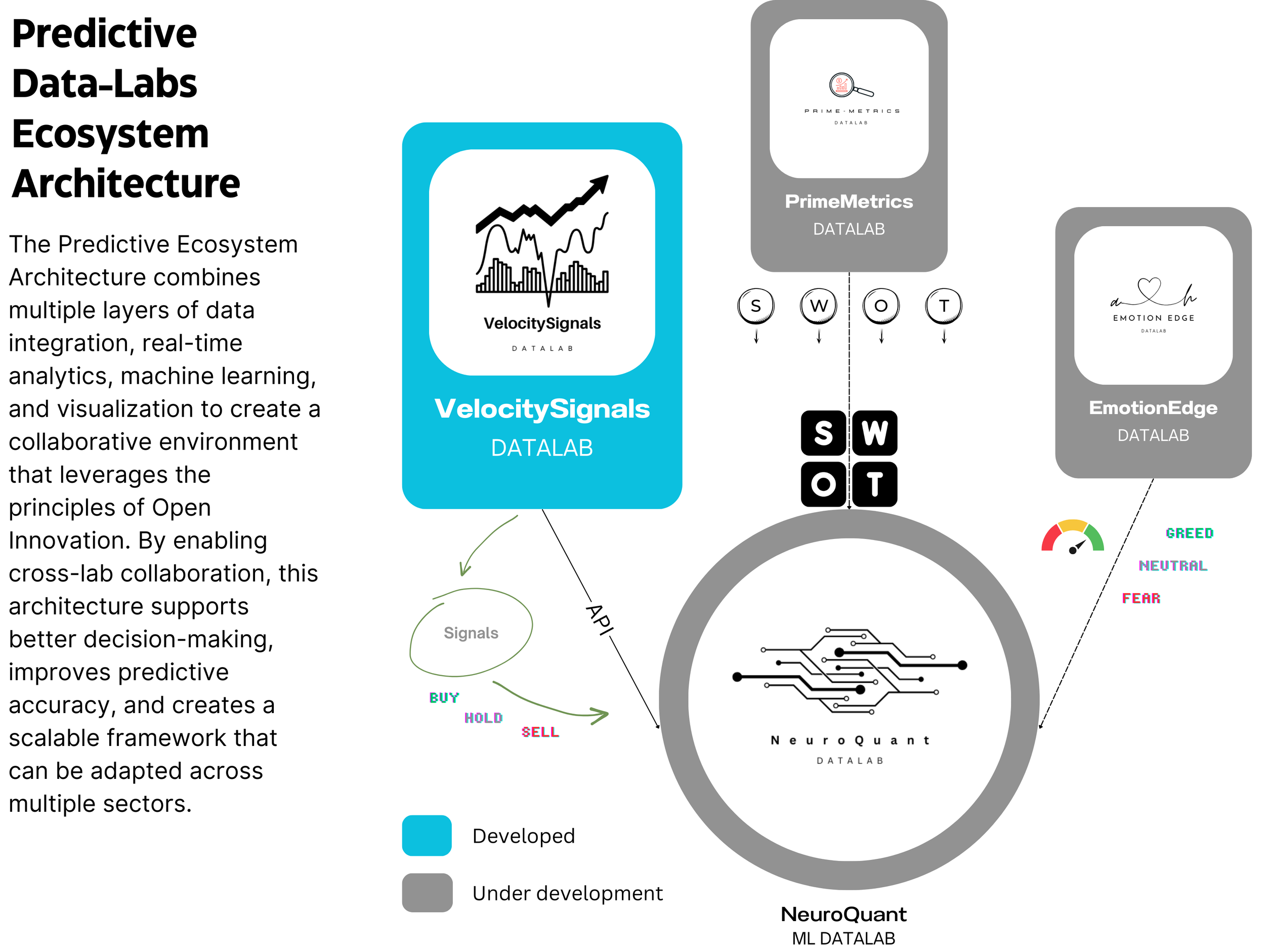

Ocean Capital is a research lab focused on leveraging data science, prediction models, and machine learning to generate Buy, Hold, and Sell signals for financial assets using indicators like RSI, MACD, and trading volume. A portion of the lab’s profits is dedicated to supporting marine conservation efforts, reinforcing my commitment to protecting ocean ecosystems while advancing financial innovation.

How the labs are built…

When it comes to tackling complex problems through research, I rely on a structured yet flexible approach that lets me transform raw data into meaningful insights.

Over the years, I’ve honed a 5-step research framework that helps me not only gather and process data efficiently but also apply it to real-world decision-making. Here’s how I do it.

The VelocitySignals Tool, part of Ocean Capital Lab, uses technical indicators such as RSI, MACD, and trading volume to analyze and predict market movements. It generates buy, sell, or hold signals from historical datasets to support data-driven decisions. Its integrated predictive algorithms are designed to anticipate market trends, so that trading strategies can respond quickly and look ahead.
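To make the idea concrete, here is a minimal sketch of how RSI, MACD, and trading volume could be combined into buy, sell, or hold signals. It is not the VelocitySignals implementation: the function names (`ema`, `rsi`, `macd`, `generate_signals`), the periods (14 for RSI, 12/26/9 for MACD, a 20-bar volume window), the 30/70 RSI thresholds, and the combination rule itself are all assumptions chosen because they are common textbook defaults.

```python
from typing import List, Optional, Tuple


def ema(values: List[float], span: int) -> List[float]:
    """Exponential moving average, alpha = 2 / (span + 1), seeded with the first value."""
    if not values:
        return []
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def rsi(closes: List[float], period: int = 14) -> List[Optional[float]]:
    """RSI with Wilder smoothing; entries before index `period` are None."""
    out: List[Optional[float]] = [None] * len(closes)
    if len(closes) <= period:
        return out
    deltas = [closes[i] - closes[i - 1] for i in range(1, len(closes))]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    for i in range(period, len(closes)):
        if i > period:
            avg_gain = (avg_gain * (period - 1) + gains[i - 1]) / period
            avg_loss = (avg_loss * (period - 1) + losses[i - 1]) / period
        if avg_gain == 0 and avg_loss == 0:
            out[i] = 50.0  # assumption: treat a flat market as neutral
        elif avg_loss == 0:
            out[i] = 100.0
        else:
            out[i] = 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)
    return out


def macd(closes: List[float], fast: int = 12, slow: int = 26,
         signal: int = 9) -> Tuple[List[float], List[float]]:
    """Return (MACD line, signal line): fast EMA minus slow EMA, and its EMA."""
    macd_line = [f - s for f, s in zip(ema(closes, fast), ema(closes, slow))]
    return macd_line, ema(macd_line, signal)


def generate_signals(closes: List[float], volumes: List[float],
                     oversold: float = 30.0, overbought: float = 70.0,
                     vol_window: int = 20) -> List[str]:
    """Hypothetical rule: act only when RSI, MACD, and volume all agree."""
    if len(closes) != len(volumes):
        raise ValueError("closes and volumes must have the same length")
    rsi_vals = rsi(closes)
    macd_line, signal_line = macd(closes)
    signals = []
    for i in range(len(closes)):
        r = rsi_vals[i]
        if r is None or i < vol_window:
            signals.append("HOLD")  # not enough history yet
            continue
        # Volume confirmation: current bar at or above the trailing average.
        avg_vol = sum(volumes[i - vol_window:i]) / vol_window
        volume_ok = volumes[i] >= avg_vol
        if r < oversold and macd_line[i] > signal_line[i] and volume_ok:
            signals.append("BUY")
        elif r > overbought and macd_line[i] < signal_line[i] and volume_ok:
            signals.append("SELL")
        else:
            signals.append("HOLD")
    return signals
```

Calling `generate_signals(closes, volumes)` on two equal-length lists of closing prices and volumes returns one label per bar. Requiring all three indicators to agree is a deliberately conservative choice for this sketch, so it will emit "HOLD" most of the time; a production tool would likely tune thresholds and weighting against historical data.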

Read the Technical Notes here.